I'm an AI Research Scientist interested in enabling trustworthy autonomy for extreme environments. I work on the Robotics team at Axon.

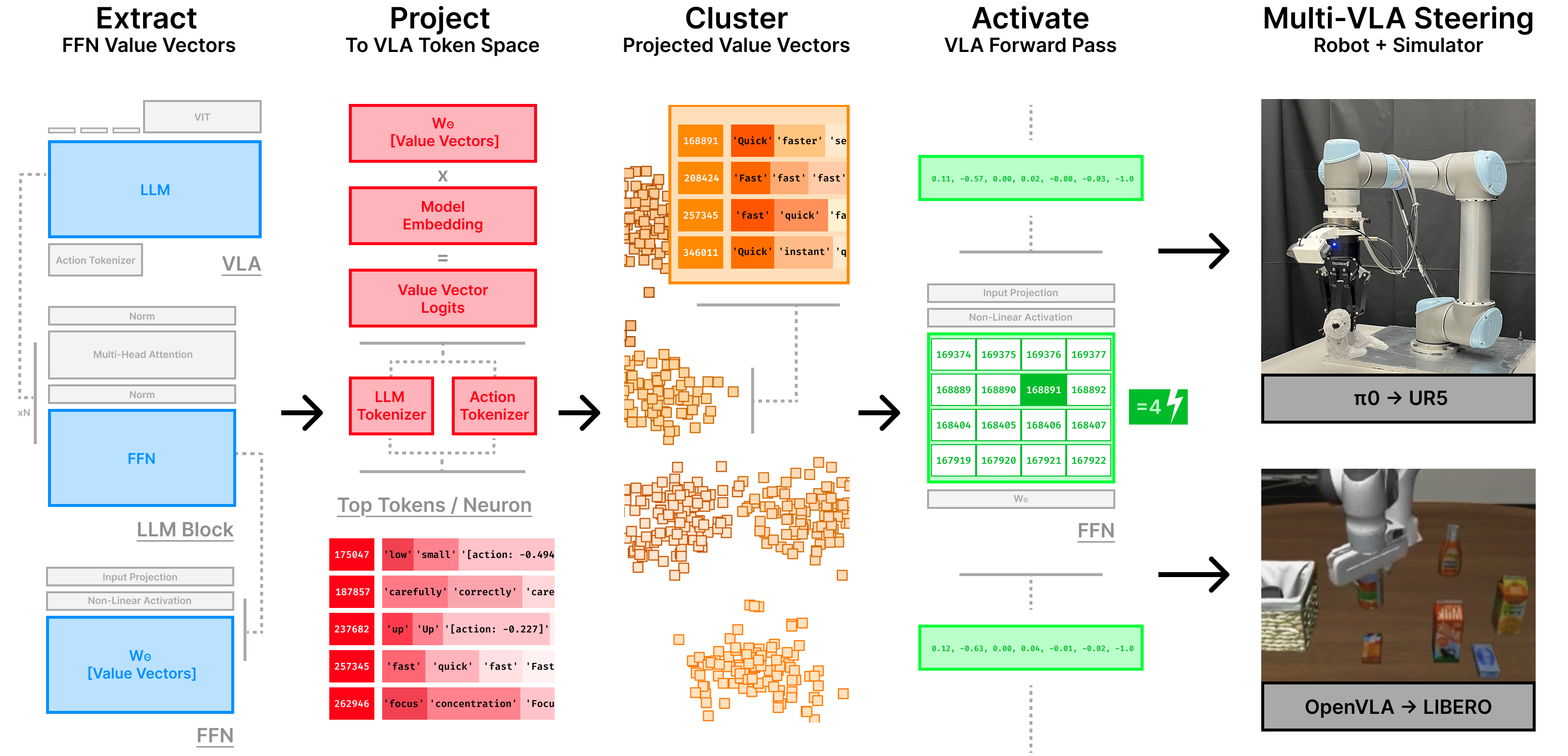

I earned my Master's at UC Berkeley in 2024 with a joint EECS/ME concentration in Robotics and Autonomous Systems. I was supported as a Schmidt Futures Quad Fellow, NSF DToD Fellow, UC CITRIS Fellow, and Foresight Institute Fellow, and I interned as an AI Engineer at AMD. My graduate research at the EECS Hybrid Systems Laboratory, led by EECS Chair Prof. Claire Tomlin, was supported by DARPA ANSR, NSF SLES, and ONR LEARN, and introduced Mechanistic Interpretability for Vision Language Action Models [CoRL 2025].

- Trustworthy Autonomy: Attribution-based protocols to interpret, validate, and steer learned control policies toward safe and aligned behavior.

- Isolated Embodied-AI: ML methods in perception, planning, and control that enable single- and multi-agent systems to operate without network connectivity or energy resupply.

Orbiting my work in intelligent systems, I reach into disciplines that map the contours of societies: economics, history, and geography. I was a 2023 AI Safety Fellow at the University of Cambridge and a 2024 Frédéric Bastiat Fellow at the Mercatus Center. I enjoy competitive team sports and currently play ice hockey.